And here they are: the 2012-2013 award nominees!

Traitor - associating Concepts using the World Wide Web

By: The Evil Geniuses, a group consisting of Lesley Wevers, Oliver Jundt, and Wanno Drijfhout (University of Twente)

From the submission:

What do Apple, Samsung, Microsoft, IBM, etc. have in common, if anything? What does Java have that PHP does not have? Which extra terms could refine the search query "lance armstrong"? What is the context of a sentence, such as "Food, games and shelter are just as important as health"? How can animals be organized in taxonomies? You may know the answers to these questions or know where to look for them, but can a computer answer these questions for you too? We suspect this could be the case if the computer had a data set of associated concepts. An example to illustrate why we have this suspicion: if we know that the concepts of the two companies Apple and Samsung are both frequently associated with the concept of a "phone", we can conclude that "phone" is something they have in common. Our idea is to test our suspicion with Traitor, a tool we developed that has a database of associated concepts and understands questions similar to our examples. [...]
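
The Apple and Samsung example can be illustrated with a minimal Python sketch. It is not Traitor's implementation: the association counts, the `min_count` threshold, and the function names are all invented here for illustration.

```python
from collections import Counter

# Toy association counts, invented for illustration only;
# they are not taken from Traitor's database.
ASSOCIATIONS = {
    "apple": Counter({"phone": 9, "computer": 7, "fruit": 4}),
    "samsung": Counter({"phone": 8, "television": 6, "computer": 2}),
}


def strong_concepts(concept, min_count=3):
    """Concepts associated with `concept` at least `min_count` times."""
    return {c for c, n in ASSOCIATIONS[concept].items() if n >= min_count}


def common_concepts(a, b, min_count=3):
    """Concepts frequently associated with both `a` and `b`."""
    return sorted(strong_concepts(a, min_count) & strong_concepts(b, min_count))


def distinguishing_concepts(a, b, min_count=3):
    """Concepts frequently associated with `a` but not with `b`."""
    return sorted(strong_concepts(a, min_count) - strong_concepts(b, min_count))


print(common_concepts("apple", "samsung"))
print(distinguishing_concepts("apple", "samsung"))
```

With a real data set the counts would come from co-occurrences on the web, and the threshold would need tuning.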

A video report, courtesy of the participating team

Babel 2012 - Web Language Connections

By: Hannes Mühleisen (Database Architectures group at the Center for Mathematics and Computer Science)

From the submission:

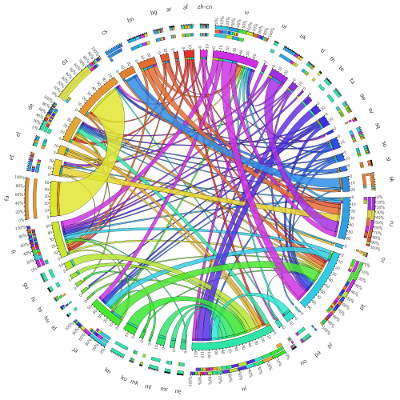

[...] However, many people speak more than one language; in fact, it has been estimated that over half of all people are bilingual [2]. On the web, this multilingualism may be reflected in a very particular way. People publish web pages, and set links to other pages. In most cases, the page being linked to will be written in the same language as the linking page. However, in many other cases it will not be. For example, many resources such as the English Wikipedia are more comprehensive than their counterparts in other languages. Hence, authors are often inclined to set a link to a page in a foreign language. The presence of such an inter-language link on the Web can indicate that the author of the page at least saw the possibility that their audience is able to read and understand the other language. By determining the language Web pages have been written in, finding the pages they link to, also determining their language, and counting these occurrences, we can quantify the inter-language links on the web according to languages. Ultimately, this can give an indication as to which languages are commonly understood together in written form. [...]
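
The counting step described above could look roughly like the following Python sketch. It assumes language detection has already been done, so each link arrives as a hypothetical `(source_language, target_language)` pair; the function name and the sample data are illustrative and not taken from the submission.

```python
from collections import Counter


def count_language_links(links):
    """Count links per (source language, target language) pair.

    `links` is an iterable of (source_language, target_language) tuples.
    Links between pages in the same language are skipped, because only
    inter-language links are of interest here.
    """
    counts = Counter()
    for source, target in links:
        if source != target:
            counts[(source, target)] += 1
    return counts


# Invented sample data: language codes of linking and linked pages.
sample_links = [("de", "en"), ("de", "en"), ("nl", "en"), ("en", "en"), ("fr", "de")]
for pair, n in count_language_links(sample_links).most_common():
    print(pair, n)
```

On a crawl-sized data set the same aggregation would typically run as a distributed job rather than in a single process.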

Results visualised by participant Hannes Mühleisen

How readable is the web?

By: Marije de Heus (University of Twente)

From the submission:

The internet is used by a wide variety of users. Research from the University of Maryland (D. Demner, 2001) indicated in 2001 that more than 7% of the internet’s users are below the age of 12 and more than 18% are below 18. Some texts are more easily readable by younger users than others. In general, texts that have longer sentences with longer words, containing more syllables, are more complex than texts with shorter sentences consisting of short words. There are several measures to compute readability, e.g. Flesch-Kincaid readability, Gunning Fog index, Dale-Chall readability, Coleman-Liau index, SMOG and the Automated Readability Index (Senter, 1967). A search engine could use information about the user (such as age and education level) in combination with the readability scores of websites to only return websites that are suitable for this user. [...]
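
As an illustration of one of these measures, here is a minimal Python sketch of the Automated Readability Index, computed as 4.71 × (characters per word) + 0.5 × (words per sentence) − 21.43. The tokenisation is a deliberately naive assumption (sentences end at `.`, `!` or `?`; words are runs of letters, digits and apostrophes) and is not necessarily how the submission processed web pages.

```python
import re


def automated_readability_index(text):
    """Approximate the Automated Readability Index of an English text."""
    # Naive sentence split: any run of ., ! or ? ends a sentence.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    # Naive word split: runs of letters, digits and apostrophes.
    words = re.findall(r"[A-Za-z0-9']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one word")
    # Only letters and digits count as characters.
    characters = sum(len(re.sub(r"[^A-Za-z0-9]", "", w)) for w in words)
    return 4.71 * characters / len(words) + 0.5 * len(words) / len(sentences) - 21.43


simple = "The cat sat. The dog ran."
dense = "Multidisciplinary investigations necessitate extraordinarily comprehensive documentation."
print(automated_readability_index(simple) < automated_readability_index(dense))
```

Higher scores indicate text that is harder to read; very short, simple texts can score below zero.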

A video report, courtesy of participant Marije de Heus

Toward Online Matching of Europe’s Job Market

By: Gebre Gebremeskel and Thaer Samar (Information Access group at the Center for Mathematics and Computer Science)

From the submission:

Our overall objective with this study is to investigate how the European job market could operate if people really moved freely throughout Europe. This idea has been inspired by the 2012 Nobel Prize in Economics being awarded to Gale and Shapley, for their research on the stable marriage problem and its implications for matching markets. Finding the stable marriage between online vacancies and social media profiles seemed the perfect challenge to approach while competing for the Norvig Web Data Science Award. [...]

The entropy of language

By: Bram Leenders and Menno Tammens (University of Twente)

From the submission:

Back in 1948, Shannon established his famous theory on (data) entropy. In this theory, he gives a formula for the unpredictability of data and, implicitly, the information contained in it. Severely summarized, it comes down to this: the entropy of data equals the unpredictability of data. Very unpredictable data (e.g. random numbers) have a high entropy. [...] Given our dataset, we chose to calculate the entropy of language (not just English) as used on the Internet. The effect on our calculation is that we get an entropy of language as used by the average internet user, misspellings included. [...]
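
Shannon's formula, H = −Σ p(x) log₂ p(x), can be sketched in a few lines of Python. The version below works on single characters, which is a simplifying assumption made here; the submission does not say in this excerpt which unit (characters, words, or longer sequences) the team used.

```python
import math
from collections import Counter


def shannon_entropy(text):
    """Shannon entropy of `text` in bits per character.

    Treats every character as an independent symbol, which ignores
    the context between neighbouring characters.
    """
    if not text:
        return 0.0
    total = len(text)
    counts = Counter(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


print(shannon_entropy("aaaa"))  # a fully predictable string
print(shannon_entropy("abcd"))  # four equally likely symbols
```

A string of one repeated character is fully predictable and has zero entropy, while a string in which every symbol is equally likely has the maximum entropy for its alphabet size.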

App rankings

By: Koen Rouwhorst (University of Twente)

From the submission:

[...] For businesses it’s important to know how [mobile] apps are doing on different platforms and/or in different regions. There are a lot of companies that jumped into the market of mobile analytics in the past couple of years. They keep track of app store downloads and revenue and sell that information. This information is all based on historical data that was either provided by the store operators or gathered through tracking. Based on that data they can tell which apps are the most downloaded or best rated overall or in a specific market. What all these mobile analytics companies don’t take into account is the voice of the web, e.g. site owners, bloggers, commenters and other participants. What I would be interested in is which apps are the most popular according to the web, or in a specific part of the web (e.g. the French-speaking part of Canada). I would also like to know how the app stores, and thus the platforms, are ranked around the World Wide Web, or in a specific market. [...]

An analysis of the use of JavaScript libraries on the web

By: Dennis Pallett, Marcel Boersma, Niels Visser, and Ties de Kock (University of Twente)

From the submission:

Using the SARA Hadoop cluster and the CommonCrawl data we have looked into the use of JavaScript libraries on the web and have determined the most popular libraries. First in section one we will discuss our idea in-depth and describe exactly what we want to do. In the second section we will describe our method to gather the data we need in order to get our results. In section three we present our results and discuss how we have produced the results. Finally in section four we discuss our results and present some conclusions. [...]

Footprint of the world top companies

By: Alexandre Marah and Ferenc Szabó (University of Twente)

From the submission:

Ranking lists of the world’s biggest companies always include metrics like market value, sales and profits, but, wrongfully, the World Wide Web is not included in the process. The internet has become a new dimension alongside the existing ones: everything in the real world has its mark on the virtual one. This is the reason why we decided to measure the footprint of the world’s top companies. Rankings based on these footprints should reflect the companies’ standings in existing lists, since more sales and profits mean that they affect the world (both the real and the virtual one) more and more.